What is an epoch in deep learning?

An epoch is one complete pass through the entire training dataset while training a machine learning model. During an epoch, every training sample in the dataset is processed by the model, and the model's weights and biases are updated according to the computed loss (error).

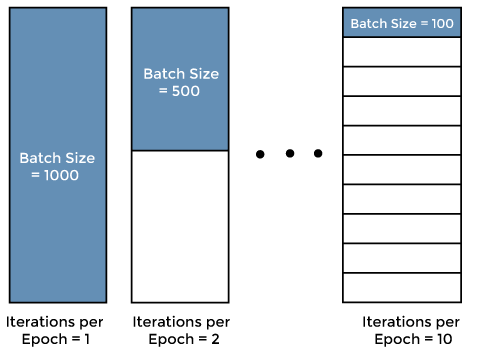

One epoch means that each sample in the training dataset has had an opportunity to update the internal model parameters. An epoch consists of one or more batches. For example, as above, an epoch that has a single batch corresponds to the batch gradient descent learning algorithm. An epoch means training the neural network with all the training data for one cycle: in an epoch, we use all of the data exactly once. A forward pass and a backward pass together are counted as one pass. An epoch is made up of one or more batches, where we use a part of the dataset to…
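To make the relation between epochs, batches, and parameter updates concrete, here is a minimal sketch in plain Python. It assumes a toy one-variable linear model trained with mean squared error; the function name `train`, the learning rate, and the synthetic data are illustrative choices, not something taken from the text above.

```python
import random

def train(xs, ys, epochs, batch_size, lr=0.05, seed=0):
    """Fit y ~ w*x + b with (mini-)batch gradient descent.

    Returns (w, b, updates), where `updates` counts parameter updates.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    updates = 0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a new order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Forward pass: prediction error for every sample in the batch.
            errors = [(w * xs[i] + b) - ys[i] for i in batch]
            # Backward pass: gradients of the mean squared error.
            grad_w = 2 * sum(e * xs[i] for e, i in zip(errors, batch)) / len(batch)
            grad_b = 2 * sum(errors) / len(batch)
            # One parameter update per batch.
            w -= lr * grad_w
            b -= lr * grad_b
            updates += 1
    return w, b, updates

# Hypothetical toy data: 20 samples drawn from y = 3x + 1.
xs = [i / 10 for i in range(20)]
ys = [3.0 * x + 1.0 for x in xs]

# batch_size == dataset size: one update per epoch (batch gradient descent).
_, _, full_batch_updates = train(xs, ys, epochs=5, batch_size=20)
# batch_size == 4: five updates per epoch (mini-batch gradient descent).
_, _, mini_batch_updates = train(xs, ys, epochs=5, batch_size=4)
print(full_batch_updates, mini_batch_updates)  # 5 25
```

In both runs every sample is used exactly once per epoch; only the number of parameter updates per epoch changes with the batch size.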

what is epoch in deep learning

https://i.pinimg.com/originals/02/19/2c/02192c665cfdd5da8c976d35ae62154f.jpg

Epoch Vs Iteration Vs Batch Vs Batch Size In Deep Learning In 2021

https://i.pinimg.com/originals/0b/a3/54/0ba35442e6aa1cd074a95f798ba837ef.jpg

What Is An Epoch Neural Networks In Under 3 Minutes YouTube

https://i.ytimg.com/vi/BvqerWSp1_s/maxresdefault.jpg

An epoch refers to one complete cycle of training the neural network with all the training data. During an epoch, the neural network undergoes a forward pass (prediction) and a backward pass (error calculation and weight update) using all the training examples. In the context of deep learning, the batch size, the number of epochs, and the number of training steps are hyperparameters that we need to configure manually. In addition to that, these are common…
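The three hyperparameters are linked by simple arithmetic. The sketch below assumes that one training step means one parameter update on one batch, and that a final, smaller batch is kept rather than dropped; the helper name `training_steps` and the example numbers are hypothetical.

```python
import math

def training_steps(num_samples, batch_size, epochs):
    """Return (steps_per_epoch, total_steps) for the given settings."""
    # A partial last batch still counts as one step, hence the ceiling.
    steps_per_epoch = math.ceil(num_samples / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs

# Example: 2,000 samples, batch size 64, 10 epochs.
print(training_steps(2000, 64, 10))  # (32, 320)
```

Frameworks that drop the incomplete last batch would use floor division instead, which gives 31 steps per epoch for the same numbers.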

Epochs in machine learning refer to the number of times the entire dataset is passed through the model during training. Increasing the number of epochs can improve the model's performance and accuracy, although training for too many epochs can lead to overfitting.

Overview: In this tutorial, we'll talk about three basic terms in deep learning: epoch, batch, and mini-batch. First, we'll talk about gradient descent, which is the basic concept that introduces these three terms. Then, we'll properly define the terms, illustrating their differences along with a detailed example.

More pictures related to what is epoch in deep learning

What Is an Epoch in Machine Learning - Comunidad Huawei Enterprise

https://forum.huawei.com/enterprise/es/data/attachment/forum/202209/26/232100fuusmpjl2isopjwo.png

What Is An Epoch In Deep Learning AI Chat GPT

https://aichatgpt.co.za/wp-content/uploads/2023/02/What-is-an-epoch-in-deep-learning_1-600x400.jpg

Learning In Deep Neural Networks And Brains With Similarity weighted

https://www.pnas.org/cms/asset/d21aa3fc-7002-442c-b067-2f7ecbd22d80/keyimage.jpg

Epochs: One epoch is when the entire dataset is passed forward and backward through the neural network exactly once. Since the whole dataset is too big to feed to the computer at once, we divide it into several smaller batches. An epoch is completed when the learning algorithm has trained on every batch from the dataset once. It's a full cycle through the data, and it's crucial for understanding how well the model is…
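As a small illustration of dividing a dataset into batches, the following sketch splits a list into consecutive chunks and checks that one pass over all the batches touches every sample exactly once. The generator name `iter_batches` and the ten-element dataset are assumptions made for the example.

```python
from collections import Counter

def iter_batches(dataset, batch_size):
    """Yield consecutive slices of `dataset`, each of at most `batch_size` items."""
    for start in range(0, len(dataset), batch_size):
        yield dataset[start:start + batch_size]

dataset = list(range(10))              # ten hypothetical samples
batches = list(iter_batches(dataset, 4))
print(batches)  # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]

# One epoch = one pass over all batches: count how often each sample appears.
visits = Counter(sample for batch in batches for sample in batch)
print(all(visits[s] == 1 for s in dataset))  # True
```

The last batch is smaller than the others because 10 is not a multiple of 4; it still belongs to the same epoch.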

Definitions: We split the training set into many batches. When we run the algorithm, it requires one epoch to analyze the full training set. An epoch is composed of many iterations, one per batch. Epoch, iteration, batch size: what does all of that mean, and how do they impact the training of neural networks? I describe all of this in this video, and I also…

04 Neural Network Epoch

https://wikidocs.net/images/page/180544/forward_backward.png

AI Basics Accuracy Epochs Learning Rate Batch Size And Loss YouTube

https://i.ytimg.com/vi/vGYcWvYnXiI/maxresdefault.jpg
