Spark SQL round to 2 decimal places: However, I want the values to be rounded to 2 digits after the decimal point, like 2.35 and 1.55, before summing them. How can I do it? I was not able to find any sub-function like sum(round(...)) of the sum function. Note: I am using Spark version 1.5.1. (Tags: scala, apache-spark)

I use this command for the columns in my DataFrame to round to 2 decimal places: `data = data.withColumn("columnName1", func.round(data["columnName1"], 2))`. I have no idea how to round the whole DataFrame with one command instead of rounding every column separately.

PySpark: `pyspark.sql.functions.round(col: ColumnOrName, scale: int = 0) -> pyspark.sql.column.Column`. Round the given value to `scale` decimal places using HALF_UP rounding mode if `scale` >= 0, or at the integral part when `scale` < 0.


https://s2.studylib.net/store/data/018236056_1-73aea5cb3f9634e8b4d88943815959ea-768x994.png

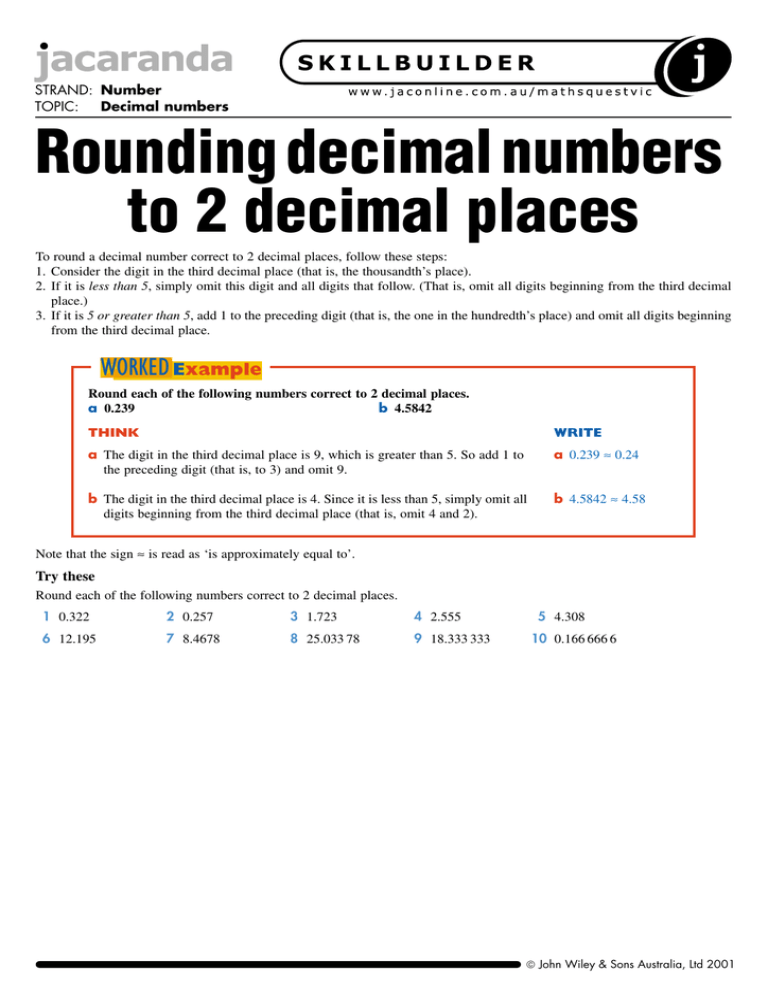

Rounding Numbers decimals BBC Bitesize

https://ichef.bbci.co.uk/images/ic/1008xn/p0bqp7nz.png

How To Round To 2 Decimal Places In Python

https://www.freecodecamp.org/news/content/images/size/w2000/2022/08/round-up-numbers-1.png

You can use the following syntax to round the values in a column of a PySpark DataFrame to 2 decimal places. First import the function with `from pyspark.sql.functions import round`, then create a new column that rounds the values in the `points` column to 2 decimal places: `df_new = df.withColumn("points2", round(df.points, 2))`.

`func.round(col("new_bid"), 2).alias("bid")`: the `new_bid` column here is of type float. The resulting DataFrame does not have the newly named `bid` column rounded to 2 decimal places, as I am trying to do; rather, it is still 8 or 9 decimal places out.

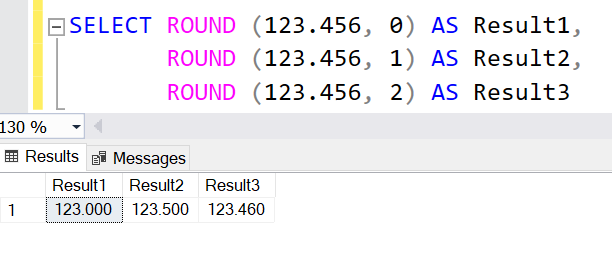

To round a single value to 2 decimal places in PySpark, you can use the round function. The round function takes two arguments: the value to be rounded and the number of decimal places to round to. Learn the syntax of the round function of the SQL language in Databricks SQL and Databricks Runtime.
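The HALF_UP mode named in the PySpark documentation quoted above can be illustrated without a cluster. The sketch below uses Python's standard `decimal` module to mimic that rounding rule for a single value. The helper `round_half_up` is hypothetical and is not Spark's implementation; it only shows how HALF_UP treats ties and how a negative scale rounds at the integral part.

```python
from decimal import Decimal, ROUND_HALF_UP


def round_half_up(value, scale=0):
    """Round `value` to `scale` decimal places, with ties going away from zero."""
    # scale=2 gives a quantum of 0.01; scale=-2 gives a quantum of 1E+2.
    quantum = Decimal(1).scaleb(-scale)
    # Going through str() avoids binary floating-point noise in the input.
    return Decimal(str(value)).quantize(quantum, rounding=ROUND_HALF_UP)


print(round_half_up(2.345, 2))    # tie at the third decimal rounds up
print(round_half_up(2.5))         # HALF_UP rounds the tie up to 3
print(round(2.5))                 # Python's built-in round() gives 2 here
print(round_half_up(1234.5, -2))  # negative scale rounds to hundreds
```

The contrast with the built-in `round()` matters when checking Spark results against values computed in plain Python, since the built-in rounds ties to the nearest even digit.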

More picture related to spark sql round to 2 decimal places

How To Round To 2 Decimal Places In Python Datagy

https://datagy.io/wp-content/uploads/2022/10/How-to-Round-to-2-Decimal-Places-in-Python-Cover-Image.png

SQL T SQL Round To Decimal Places YouTube

https://i.ytimg.com/vi/n9qhOfAPoUQ/maxresdefault.jpg

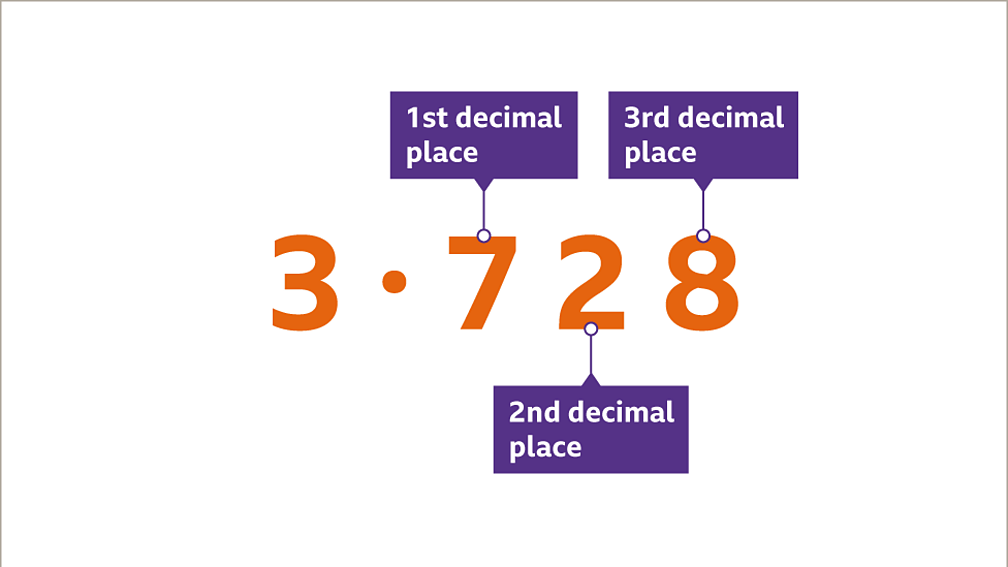

2 Decimal Places

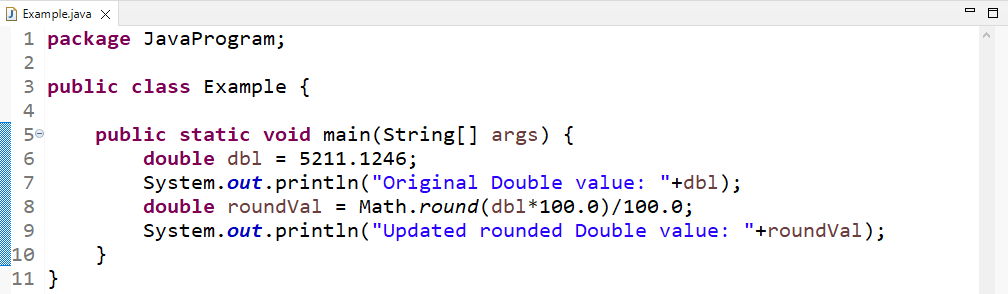

https://linuxhint.com/wp-content/uploads/2022/09/How-to-Round-a-Double-to-two-Decimal-Places-in-Java-1.png

Round off in PySpark using the round() function. Syntax: `round(colname1, n)`, where `colname1` is the column name and `n` is the number of decimal places to round to. When called with only the column name, round() rounds the column to the nearest integer, and the resulting values are stored in a separate column. To round up a column in PySpark, we use the ceil() function; to round down, we use the floor() function; and to round off to a given decimal place, we use the round() function.

The PySpark SQL Functions round method rounds the values of the specified column. Parameters: 1. `col` (string or Column): the column to perform rounding on. 2. `scale` (int, optional): if scale is positive, such as scale=2, then values are rounded to 2 decimal places.

Spark 1.5.2: You can simply use the `format_number(col, d)` function, which rounds the numerical input to d decimal places and returns it as a string. In your case: `raw_data = raw_data.withColumn("LATITUDE_ROUND", format_number(raw_data.LATITUDE, 3))`.


To discretize or round the scores to the nearest decimal place as specified, you can use the round function in Scala Spark SQL. Here's an example code snippet that demonstrates how to achieve this: `import org.apache.spark.sql.functions`