Is 10-bit pixel format good?

By providing a higher color depth, AMD's 10-bit pixel format enhances overall image quality, making it particularly beneficial for professionals in fields such as …

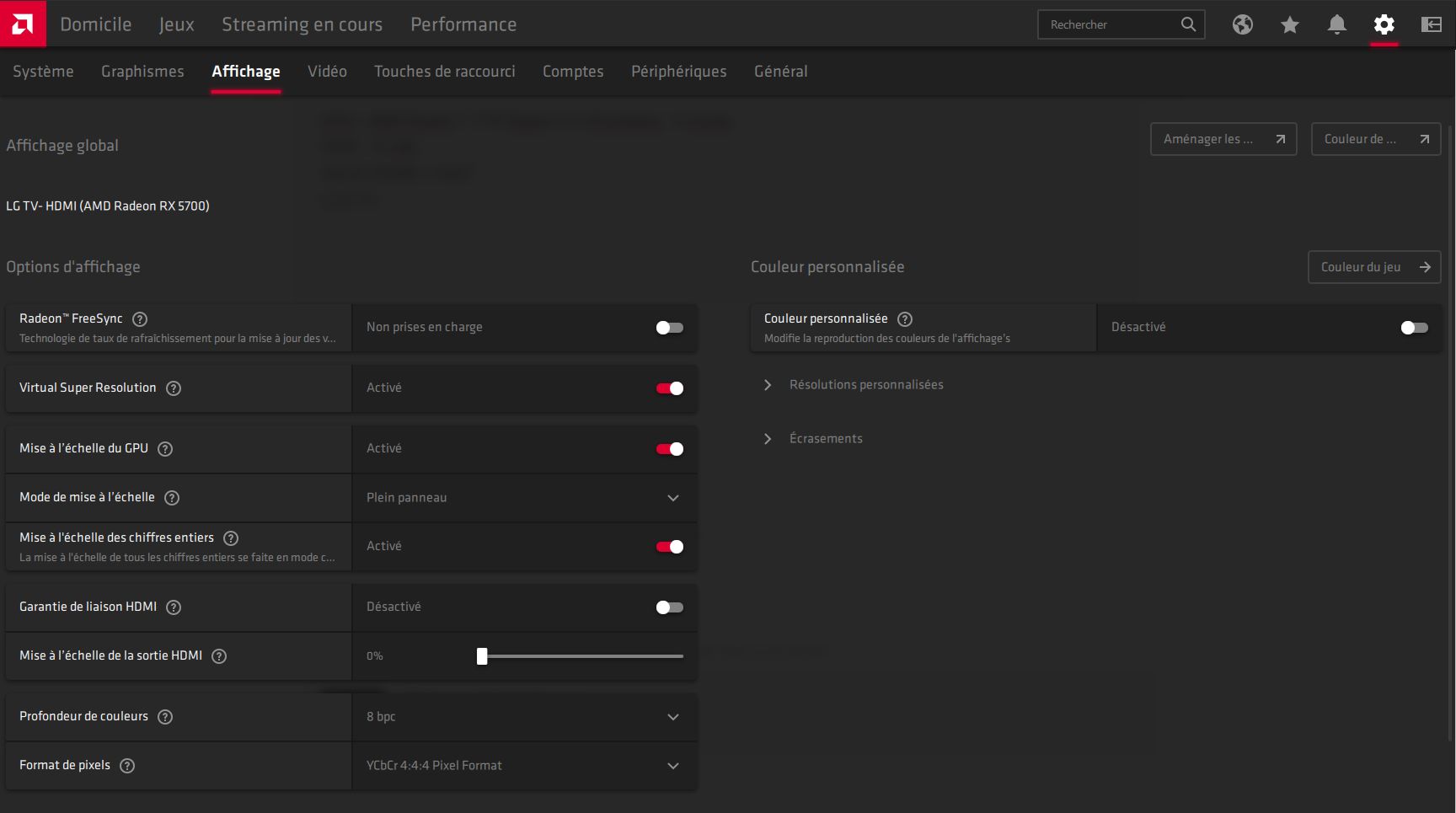

I guess it's always possible, although it shouldn't be: all parts (monitor, cable, GPU) are supposed to be DisplayPort 1.4. My display settings right now are 4K …

Aug 8, 2023: Hi, in the AMD Adrenalin driver I see that there are two separate options, 10-Bit Color Depth and 10-Bit Pixel Format, and I was wondering which one I …

https://cdn.shopify.com/s/files/1/1378/3337/articles/why_10-bit_1024x1024.jpg?v=1601294394

8-Bit vs 10-Bit for Video: What's the Difference? (MPB, YouTube)

https://i.ytimg.com/vi/R9hQYZbPjR4/maxresdefault.jpg

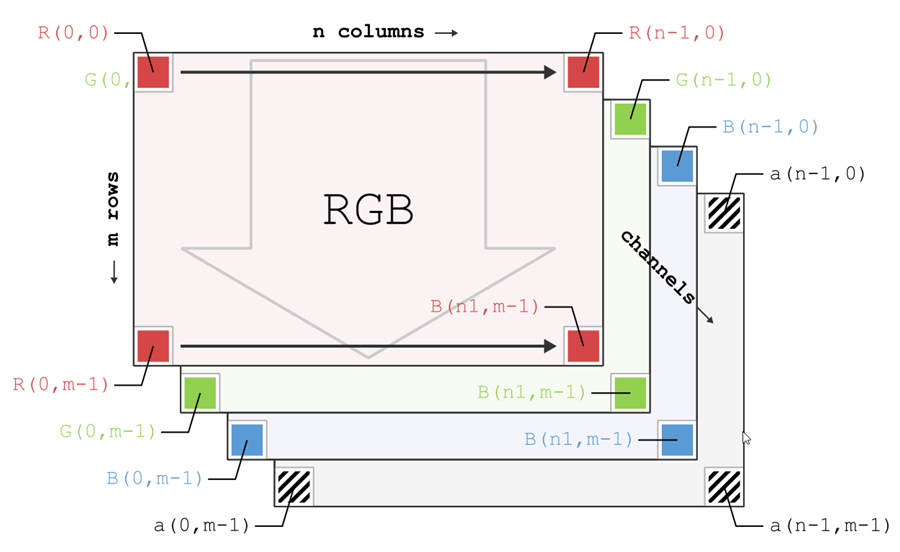

Color Pixel Formats

https://www.1stvision.com/cameras/IDS/IDS-manuals/en/images/readout-sequence-color-image.png

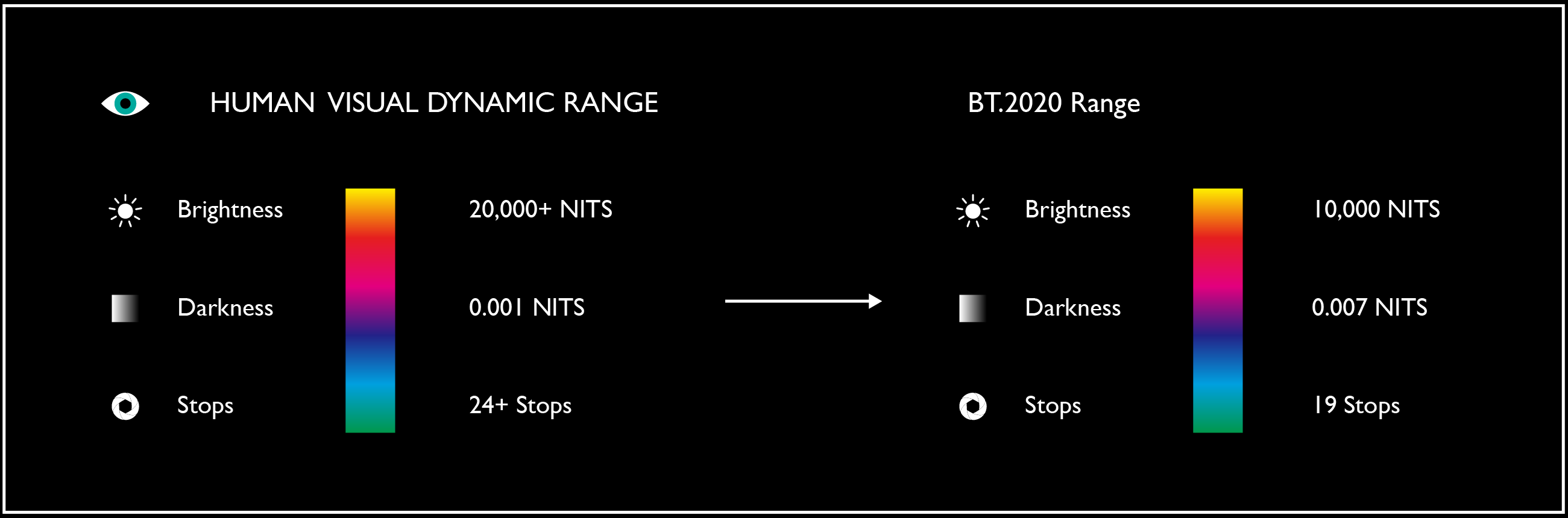

So the choices are 12-bit, 10-bit, or 8-bit, and RGB, YCbCr 4:4:4, YCbCr 4:2:2, or YCbCr 4:2:0. YCbCr 4:2:0 is acceptable for watching movies and sometimes OK for playing games; there's … Since I'm already selecting 10-bit color depth with 4:4:4 full RGB HDR at 23 Hz for movies, and 10-bit color depth with 4:2:2 YCbCr HDR at 60 Hz for games, I am wondering …
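A rough way to see why 4:2:2 or 4:2:0 tends to come up for 4K 60 Hz HDR is to compare uncompressed data rates. The sketch below is a simplification under stated assumptions: it uses the usual average sample counts per pixel for each chroma format, counts visible pixels only (no blanking intervals, no link encoding overhead, so real link requirements are higher), and uses 24 Hz as a round stand-in for 23.976 Hz film playback. The function names are illustrative only, not taken from any driver or tool.

```python
# Average samples per pixel for each chroma format (hypothetical helper table).
# RGB / 4:4:4: every pixel carries all three components.
# 4:2:2: chroma halved horizontally -> 1 luma + 2 * 1/2 chroma = 2 samples.
# 4:2:0: chroma halved both ways    -> 1 luma + 2 * 1/4 chroma = 1.5 samples.
SAMPLES_PER_PIXEL = {
    "RGB / YCbCr 4:4:4": 3.0,
    "YCbCr 4:2:2": 2.0,
    "YCbCr 4:2:0": 1.5,
}


def bits_per_pixel(bit_depth: int, chroma: str) -> float:
    """Average bits per pixel for a given per-component bit depth."""
    return bit_depth * SAMPLES_PER_PIXEL[chroma]


def active_data_rate_gbps(width: int, height: int, refresh_hz: float,
                          bit_depth: int, chroma: str) -> float:
    """Uncompressed rate of visible pixels only, in Gbit/s.

    Ignores blanking and link overhead, so it underestimates what a
    cable/port actually has to carry.
    """
    return width * height * refresh_hz * bits_per_pixel(bit_depth, chroma) / 1e9


if __name__ == "__main__":
    for chroma in SAMPLES_PER_PIXEL:
        for hz in (24, 60):
            rate = active_data_rate_gbps(3840, 2160, hz, 10, chroma)
            print(f"4K {hz} Hz, 10-bit, {chroma}: {rate:.2f} Gbit/s")
```

At 10-bit, 4:2:2 needs two thirds of the 4:4:4 rate and 4:2:0 needs half, which is the trade-off behind the movie/game settings described above.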

A 10-bit value can store values between 0 and 1023, i.e. 1024 different values (levels). With three color channels, 8 bits per channel gives 256³ = 16,777,216 (16.7 million) colors, while 10 bits per channel gives 1024³ = 1,073,741,824 (1.07 billion) colors. So a 10-bit panel can render four times as many levels per channel as an 8-bit screen, or 64 times as many colors in total. A 12-bit monitor goes further, with 4096 possible values of each primary per pixel, or 4096 × 4096 × 4096 ≈ 68.7 billion colors.
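The arithmetic above can be checked with a few lines: levels per channel are 2 raised to the bit depth, and the number of colors is that count raised to the number of channels (three, for RGB). The helper names are illustrative.

```python
def levels_per_channel(bits: int) -> int:
    """Distinct values an unsigned integer of `bits` bits can hold (0 .. 2**bits - 1)."""
    return 2 ** bits


def total_colors(bits_per_channel: int, channels: int = 3) -> int:
    """Distinct color combinations: levels per channel raised to the channel count."""
    return levels_per_channel(bits_per_channel) ** channels


for bits in (8, 10, 12):
    levels = levels_per_channel(bits)
    print(f"{bits}-bit: {levels} levels/channel (0..{levels - 1}), "
          f"{total_colors(bits):,} colors")
```

The ratio between 10-bit and 8-bit is 4 per channel and 4³ = 64 overall, which is where the "four times the levels, 64 times the colors" comparison comes from.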

Enable 10-Bit Pixel Format in Radeon Adrenalin, Disable Windows HDR

https://www.overclock.net/attachments/1664997366843-png.2574664/

What Is 10-Bit Pixel Format Support? (Beinyu)

https://www.benq.com/content/dam/b2c/en-us/knowledge-center/bt2020/bt2020-3.png

Blackmagic DeckLink Output Pixel Format 10-Bit (Beginners)

https://forum.derivative.ca/uploads/default/original/2X/4/4488bb5327028aa2717e87a7cbb0bc47dff512c0.jpeg

10-bit displays use 10 bits to represent each of the red, green, and blue color channels. In other words, each pixel is represented with 30 bits instead of the 24 bits used in 8-bit displays.

However, when I list the supported encoder settings using `ffmpeg -h encoder=hevc_nvenc` (output of the command pasted below), even though it supports …
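Since 30 bits do not fill a byte-aligned word, 10-bit-per-channel pixels are commonly stored packed into 32 bits with 2 bits to spare (often used as alpha). The sketch below assumes one particular layout (R in bits 0 to 9, G in 10 to 19, B in 20 to 29, A in 30 and 31); this order is a hypothetical choice for illustration, and real formats differ between APIs.

```python
def pack_rgb10a2(r: int, g: int, b: int, a: int = 3) -> int:
    """Pack three 10-bit channels and a 2-bit alpha into one 32-bit integer.

    Assumed (hypothetical) layout: bits 0-9 = R, 10-19 = G, 20-29 = B, 30-31 = A.
    Default alpha 3 is the maximum 2-bit value (fully opaque).
    """
    for name, value, bits in (("r", r, 10), ("g", g, 10), ("b", b, 10), ("a", a, 2)):
        if not 0 <= value < (1 << bits):
            raise ValueError(f"{name}={value} does not fit in {bits} bits")
    return r | (g << 10) | (b << 20) | (a << 30)


def unpack_rgb10a2(word: int) -> tuple[int, int, int, int]:
    """Inverse of pack_rgb10a2 under the same assumed layout."""
    mask10 = (1 << 10) - 1
    return (word & mask10,
            (word >> 10) & mask10,
            (word >> 20) & mask10,
            (word >> 30) & 0b11)


pixel = pack_rgb10a2(1023, 512, 0)
print(hex(pixel))               # 0xc00803ff
print(unpack_rgb10a2(pixel))    # (1023, 512, 0, 3)
```

An 8-bit pixel fits the same 32-bit word as 8:8:8:8, so 10-bit color costs no extra memory per pixel in this layout; it only trades alpha precision (8 bits down to 2) for color precision.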

10-bit video, 10-bit stills modes, and the ability to shoot HEIF files are all features increasingly being added to cameras. But what's the benefit, and when should you use these modes? We're going to look …

The Dolby Vision standard uses 12 bits per color channel, which is designed to preserve maximum image quality even though it uses more bits. This covers the expanded range of luminance that is …
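The practical payoff of extra bits is finer steps across smooth gradients, i.e. less visible banding. The sketch below is a simplification: it assumes plain linear quantization of a normalized intensity, whereas HDR formats such as Dolby Vision encode luminance with the non-linear PQ curve, so real step sizes differ. The gradient range (0.20 to 0.30) and width (3840 pixels) are arbitrary example values, and the function names are hypothetical.

```python
def quantize(value: float, bits: int) -> int:
    """Map a normalized intensity in [0, 1] to the nearest n-bit code (linear)."""
    max_code = (1 << bits) - 1
    return round(value * max_code)


def distinct_steps(start: float, end: float, width: int, bits: int) -> int:
    """Count distinct codes a horizontal gradient uses after quantization."""
    codes = {
        quantize(start + (end - start) * x / (width - 1), bits)
        for x in range(width)
    }
    return len(codes)


for bits in (8, 10, 12):
    steps = distinct_steps(0.20, 0.30, 3840, bits)
    print(f"{bits}-bit: {steps} distinct steps across 3840 pixels")
```

Over this narrow slice of the range, 8-bit leaves only a couple of dozen steps, so each band is well over 100 pixels wide, while 12-bit leaves a few hundred much narrower ones.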

Unreal 16-Bit Rendering Instea… (Aximmetry Forum)

https://aximmetry.blob.core.windows.net/forumimages/id1996_BDDDC23FF4D6A3A49A1B1A2AB9F349A5

AMD Setting Pixel Format (Beinyu)

https://community.amd.com/sdtpp67534/attachments/sdtpp67534/drivers-and-software-discussions/7100/1/Answer.jpg
