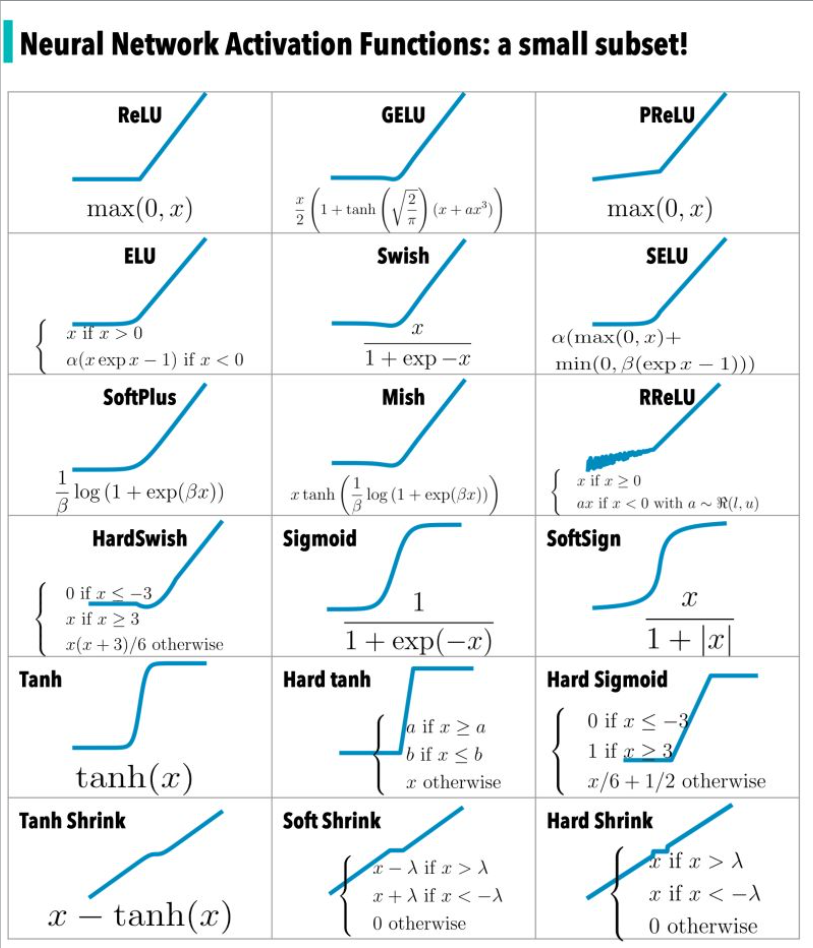

The $f(x) = \max(0, x)$ activation function, ReLU, stands as one of the most popular activation functions. It introduces sparsity by setting negative values to zero, making it computationally …

ReLU stands for Rectified Linear Unit. The function is defined as $f(x) = \max(0, x)$, which returns the input value if it is positive and zero if it is negative. Our function accepts a single input $x$ and returns the maximum of 0 and the value itself. This means that ReLU introduces non-linearity into the network: positive values pass through unaffected, while negative values are clamped to zero, so the output is not a linear function of the input over its whole domain.
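
A minimal sketch of the definition above in plain Python (standard library only). The helper names `relu`, `relu_vector`, and `sparsity` are hypothetical, chosen for illustration; `sparsity` just counts how many outputs ReLU sets to zero, to make the sparsity point concrete.

```python
from typing import List


def relu(x: float) -> float:
    """Rectified Linear Unit: return x if x > 0, otherwise 0."""
    return max(0.0, x)


def relu_vector(xs: List[float]) -> List[float]:
    """Apply ReLU element-wise to a list of inputs."""
    return [relu(x) for x in xs]


def sparsity(xs: List[float]) -> float:
    """Fraction of ReLU outputs that are exactly zero (hypothetical helper)."""
    outputs = relu_vector(xs)
    return sum(1 for y in outputs if y == 0.0) / len(outputs)


if __name__ == "__main__":
    inputs = [-2.0, -0.5, 0.0, 1.5, 3.0]
    print(relu_vector(inputs))  # [0.0, 0.0, 0.0, 1.5, 3.0]
    print(sparsity(inputs))     # 0.6
```

Positive inputs come back unchanged, and every non-positive input becomes 0, which is where the sparsity comes from.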

$f(x) = \max(0, x)$ activation function

https://www.researchgate.net/profile/Domenico-Bloisi/publication/320733432/figure/fig3/AS:556227689627649@1509626423290/The-ReLU-f-x-max0-x-and-the-Logistic-function-f-x.png

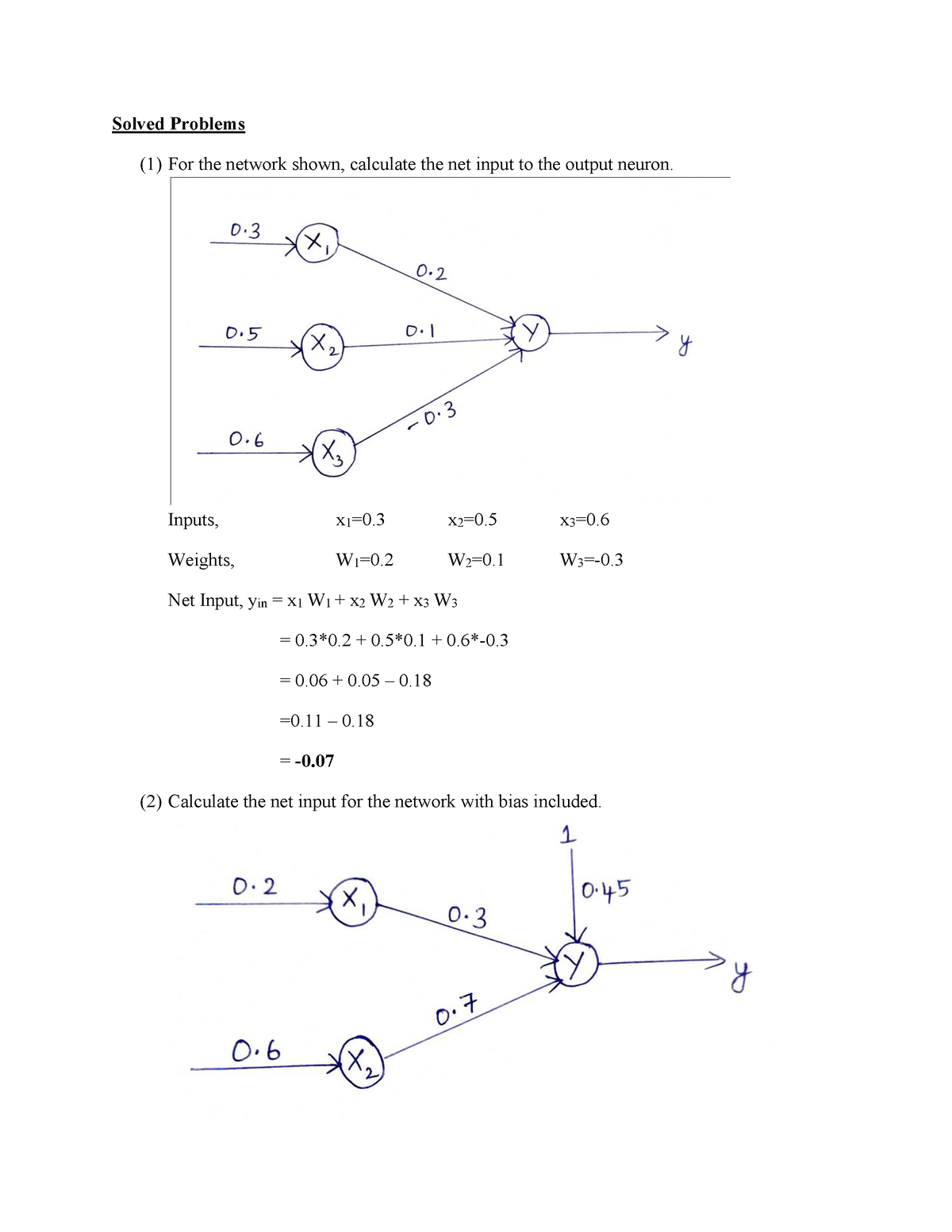

Activation Functions Solved Problems Solved Problems 1 For The

https://d20ohkaloyme4g.cloudfront.net/img/document_thumbnails/39b02a0720a781135aaba8fbc2c19748/thumb_1200_1553.png

$$f(x) = \max(0, x)$$

where $x$ is the input to the neuron. The function returns $x$ if $x > 0$ and returns 0 if $x \le 0$.

ReLU (Rectified Linear Unit) is defined as $f(x) = \max(0, x)$. It is a widely used activation function, especially in convolutional neural networks. It is cheap to compute, does not saturate for positive inputs, and does not cause the …
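
The "does not saturate" point can be checked numerically by comparing ReLU's derivative with the derivative of the logistic sigmoid. This is a sketch under stated assumptions: the derivative of ReLU is undefined at $x = 0$, and the code returns 0 there purely by convention; `relu_grad`, `sigmoid`, and `sigmoid_grad` are illustrative names.

```python
import math


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for x > 0, 0 for x < 0 (0 at x == 0 by assumed convention)."""
    return 1.0 if x > 0 else 0.0


def sigmoid(x: float) -> float:
    """Numerically stable logistic sigmoid."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: s * (1 - s), which shrinks toward 0 for large |x|."""
    s = sigmoid(x)
    return s * (1.0 - s)


if __name__ == "__main__":
    for x in (0.5, 5.0, 20.0):
        # ReLU's gradient stays at 1 while the sigmoid's gradient collapses.
        print(f"x={x:>5}: relu'={relu_grad(x)}, sigmoid'={sigmoid_grad(x):.3e}")
```

For large positive inputs the sigmoid gradient becomes vanishingly small, whereas ReLU's gradient stays constant at 1.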

When building your deep learning model, the choice of activation functions is an important one. In this article, we'll review the main activation functions, their implementations in Python, and the advantages and disadvantages of each.

ReLU: $\mathrm{ReLU}(x) = \max(0, x)$. In plain English: if the input is positive, return $x$; if it is negative, return 0. This is one of the simplest non-linearities possible: computing the max function is as simple as it gets.
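
A minimal standard-library sketch of ReLU next to two other common activations. Picking sigmoid and tanh as the comparison set is an assumption for illustration, and the `ACTIVATIONS` registry is a hypothetical name, not a library API. It shows the contrast the paragraph draws: ReLU is a single comparison, while the others are transcendental functions.

```python
import math
from typing import Callable, Dict


def sigmoid(x: float) -> float:
    """Logistic sigmoid (numerically stable form); needs an exponential."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def tanh(x: float) -> float:
    """Hyperbolic tangent, a transcendental function like the sigmoid."""
    return math.tanh(x)


def relu(x: float) -> float:
    """ReLU: a single comparison, no exponentials."""
    return max(0.0, x)


# Hypothetical name -> function registry used only for this comparison.
ACTIVATIONS: Dict[str, Callable[[float], float]] = {
    "sigmoid": sigmoid,
    "tanh": tanh,
    "relu": relu,
}

if __name__ == "__main__":
    for name, fn in ACTIVATIONS.items():
        print(name, [round(fn(x), 4) for x in (-2.0, 0.0, 2.0)])
```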

Aman's AI Journal • Primers • Activation Functions

https://aman.ai/primers/ai/assets/activation/1.png

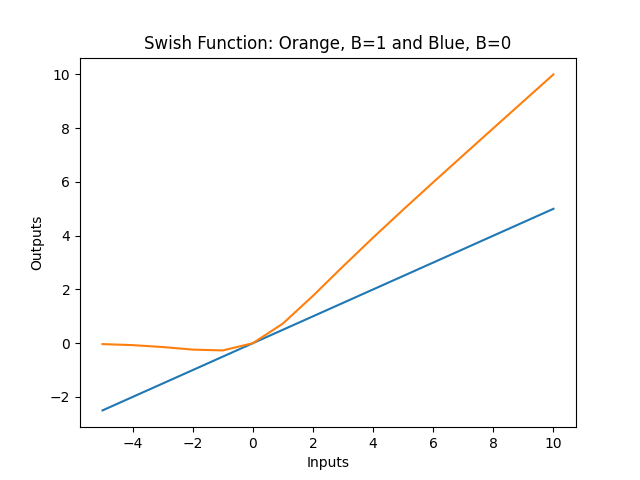

A Rectified Linear Unit is a form of activation function commonly used in deep learning models. In essence, the function returns 0 if it receives a negative input, and returns the input unchanged if it receives a positive value.

For the Swish function, $f(x) = x\,\sigma(\beta x)$ with $\sigma$ the logistic sigmoid, as $\beta \to \infty$ we get $f(x) \to \max(0, x)$: for $x > 0$, $\sigma(\beta x) \to 1$, and for $x < 0$, $\sigma(\beta x) \to 0$. In other words, $f(x)$ acts as the ReLU activation function. This suggests that the Swish activation function can be loosely viewed as …
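
A small numerical check of the limit above, as a sketch assuming Swish is $x\,\sigma(\beta x)$. The sample inputs and $\beta$ values are arbitrary choices for illustration. The code measures the largest gap between Swish and ReLU on a few inputs as $\beta$ grows.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic sigmoid (avoids overflow for large |z|)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def swish(x: float, beta: float = 1.0) -> float:
    """Swish activation: x * sigmoid(beta * x)."""
    return x * sigmoid(beta * x)


def relu(x: float) -> float:
    return max(0.0, x)


def max_gap(beta: float, xs=(-2.0, -0.5, 0.5, 2.0)) -> float:
    """Largest |swish(x, beta) - relu(x)| over the sample inputs."""
    return max(abs(swish(x, beta) - relu(x)) for x in xs)


if __name__ == "__main__":
    for beta in (1.0, 10.0, 100.0):
        # The gap shrinks toward 0 as beta grows, i.e. Swish approaches ReLU.
        print(f"beta={beta:>6}: max |swish - relu| = {max_gap(beta):.2e}")
```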

Instead of being 0 when $x < 0$, a leaky ReLU allows a small, non-zero, constant gradient $\alpha$ (normally $\alpha = 0.01$). Hence the function can be written as $f(x) = \max(\alpha x, x)$, which matches the piecewise definition as long as $0 \le \alpha < 1$.

ReLU: the rectified linear unit is one of the most widely used activation functions. It is defined as $f(x) = \max(0, x)$. Graphically, the main …
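
A minimal sketch of the leaky ReLU described above, using the $\max(\alpha x, x)$ form with the usual default $\alpha = 0.01$. The names `leaky_relu` and `leaky_relu_grad` are illustrative, and the range check on `alpha` is an added safeguard because the max form is only valid for $0 \le \alpha < 1$.

```python
def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: x for x > 0, alpha * x otherwise, via max(alpha * x, x)."""
    if not 0.0 <= alpha < 1.0:
        # With alpha >= 1 the max form no longer matches the piecewise definition.
        raise ValueError("max(alpha * x, x) form requires 0 <= alpha < 1")
    return max(alpha * x, x)


def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    """Gradient: 1 for x > 0, the small constant alpha otherwise."""
    return 1.0 if x > 0 else alpha


if __name__ == "__main__":
    for x in (-10.0, 0.0, 3.0):
        print(x, leaky_relu(x), leaky_relu_grad(x))
```

Setting `alpha=0.0` recovers plain ReLU; a positive `alpha` keeps a non-zero gradient for negative inputs.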

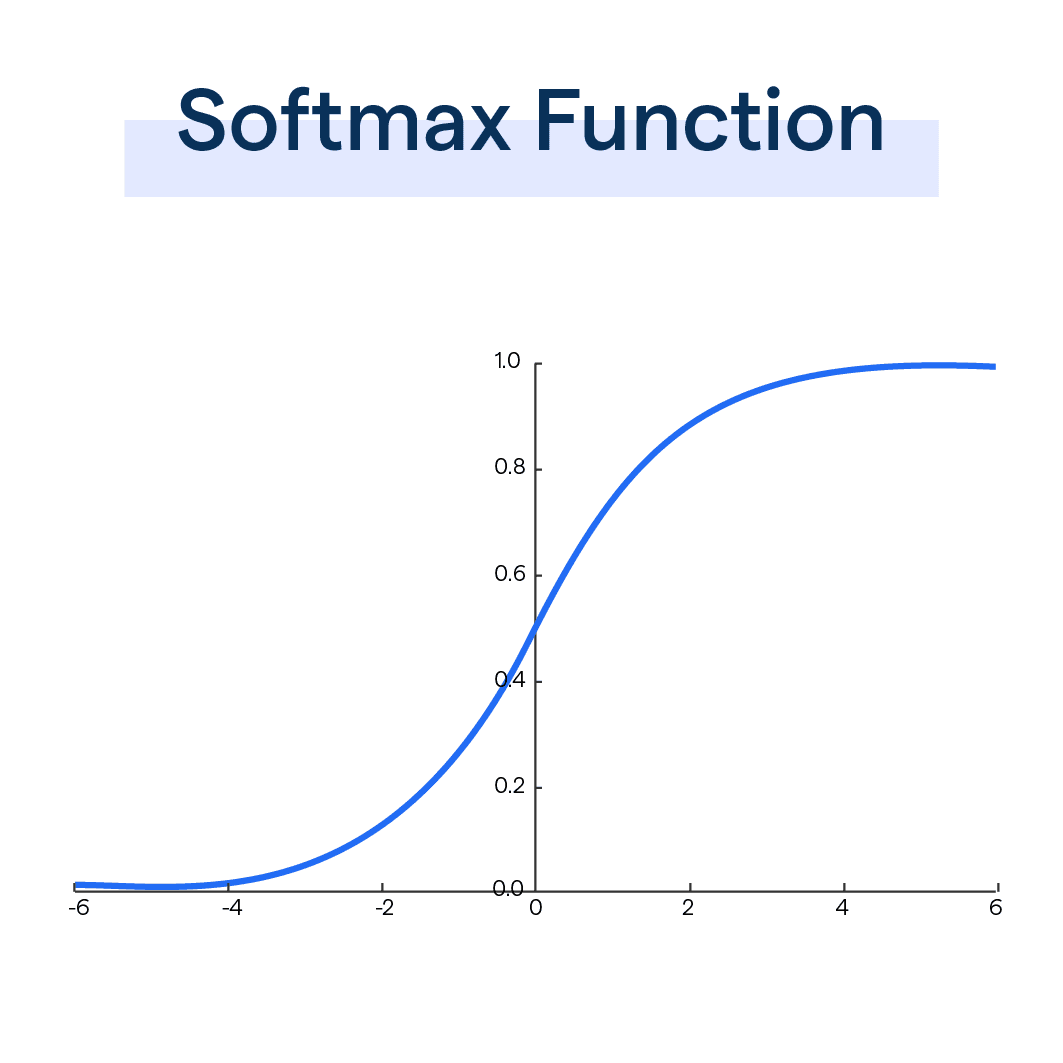

Softmax Function: Advantages and Applications (BotPenguin)

https://ik.imagekit.io/botpenguin/assets/website/Softmax_Function_07fe934386.png

Swish Activation Function

https://iq.opengenus.org/content/images/2021/12/Swish.png

Why are rectified linear unit (ReLU) activation functions considered non-linear? $f(x) = \max(0, x)$. They are linear when the input is positive, and from my understanding, to unlock the …
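
One way to see why ReLU counts as non-linear is to test the two properties a linear function must satisfy for all inputs: additivity, $f(a + b) = f(a) + f(b)$, and homogeneity, $f(c\,x) = c\,f(x)$. The sketch below (helper names `is_additive` and `is_homogeneous` are hypothetical) shows both hold when every value stays positive, but fail as soon as a negative value is involved.

```python
from typing import Callable


def relu(x: float) -> float:
    return max(0.0, x)


def is_additive(f: Callable[[float], float], a: float, b: float) -> bool:
    """Check f(a + b) == f(a) + f(b) for a single pair of inputs."""
    return f(a + b) == f(a) + f(b)


def is_homogeneous(f: Callable[[float], float], c: float, x: float) -> bool:
    """Check f(c * x) == c * f(x) for a single scalar and input."""
    return f(c * x) == c * f(x)


if __name__ == "__main__":
    # Both inputs positive: ReLU acts like the identity, so additivity holds.
    print(is_additive(relu, 1.0, 2.0))     # True
    # Mixed signs: relu(1 + -1) = 0, but relu(1) + relu(-1) = 1.
    print(is_additive(relu, 1.0, -1.0))    # False
    # Negative scalar: relu(-1 * 2) = 0, but -1 * relu(2) = -2.
    print(is_homogeneous(relu, -1.0, 2.0))  # False
```

A single counterexample is enough to rule out linearity, which is why ReLU is non-linear even though each of its two pieces is linear.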